This document briefly describes how our use cases work with Microsoft Azure Cognitive Services.

1. What is Azure?

The Azure cloud platform comprises more than 200 products and cloud services. They let you build, run, and manage applications across multiple clouds, on-premises, and at the edge, with the tools and frameworks of your choice.

2. What are Cognitive Services?

Azure Cognitive Services are cloud-based services with REST APIs and client library SDKs that help build cognitive intelligence into applications. They comprise various AI services that make it possible to build solutions that can see, hear, speak, understand, and even make decisions.
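As a rough illustration of the REST side, the sketch below builds (but does not send) an image analysis request. It assumes the Computer Vision Analyze Image endpoint (v3.2) with the `Description`, `Faces`, and `Color` visual features, which correspond to the `description`, `faces`, and `color` fields read in the use cases; which fields are actually returned depends on the API version. The endpoint, key, and image URL are placeholders, `buildAnalyzeRequest` is a hypothetical helper name, and the code assumes a JVM with `java.net.http` (JDK 11+), so an Android app would need a different HTTP client.

```kotlin
import java.net.URI
import java.net.http.HttpRequest

// Hypothetical helper: builds an Analyze Image request for a publicly reachable image URL.
// Assumption: Computer Vision v3.2 REST API; endpoint and key come from your own Azure resource.
fun buildAnalyzeRequest(endpoint: String, key: String, imageUrl: String): HttpRequest {
    val url = "${endpoint.trimEnd('/')}/vision/v3.2/analyze?visualFeatures=Description,Faces,Color"
    val body = "{\"url\":\"$imageUrl\"}"
    return HttpRequest.newBuilder(URI.create(url))
        .header("Ocp-Apim-Subscription-Key", key)
        .header("Content-Type", "application/json")
        .POST(HttpRequest.BodyPublishers.ofString(body))
        .build()
}

fun main() {
    val request = buildAnalyzeRequest(
        "https://example-resource.cognitiveservices.azure.com/", // placeholder endpoint
        "YOUR_KEY",                                               // placeholder key
        "https://example.com/drone-image.jpg"                     // placeholder image
    )
    println(request.uri())
}
```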

2.1. Use Cases

| Use Case | Description |
|---|---|
| Corona Limit | Checks whether enough people in a picture are wearing a mask |
| Forest Fire | Uses color differences to determine whether there is a fire |
| Traffic Jam | Checks how long the traffic jam is |
| Animals In Grass | Checks whether a field is clear of animals |

2.2. How it works

2.2.1. Corona Limit

First, we check whether the image was taken outside, because masks do not need to be checked in that case.

    // Split the serialized description on quotes so every tag becomes its own token.
    val descriptionString: List<String> = json.get("description").toString().split("\"")

    for (a in descriptionString) {
        if (a == tag_legal) {
            // An outdoor picture needs no mask check.
            println("Tag: outside party")
            isOutside = true
            break
        }
        if (a == tag_crowd || a == tag_group) {
            println("Tag: failed")
        }
    }

This works by checking the description string for tag_legal (in this case "outdoor"). Azure generates the description string after scanning the image; it contains all the information that Azure was able to recognize.
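To make the token lookup concrete, here is a minimal, self-contained sketch of the quote-splitting technique on a hand-written sample. The sample string, its key order, and the helper name `tokenize` are assumptions for illustration; the real description string depends on what Azure returns and on how the JSON library serializes it.

```kotlin
// Hypothetical sample of a serialized "description" object (not real Azure output).
val sampleDescription =
    """{"captions":[{"confidence":0.9,"text":"a group of people wearing masks"}],"tags":["person","indoor"]}"""

// Splitting on the quote character turns every key and every string value into its own token.
fun tokenize(description: String): List<String> = description.split("\"")

fun main() {
    val tokens = tokenize(sampleDescription)
    println("outdoor" in tokens) // tag lookup by simple membership
    println(tokens[7])           // with this key order, token 7 is the caption text
}
```

The position of the caption depends on the key order and on how many strings precede it, so a fixed index only holds while the serialized layout stays the same.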

Checking the image for masks works in the same manner as the case described above.

    if (!isOutside) {
        // Token 7 of the description is expected to hold the caption text.
        val eachWord: List<String> = descriptionString[7].split(" ")
        for (a in eachWord) {
            if (a == tag_mask || a == tag_masks) {
                println("Caption: mask protection")
                // isOutside is reused here to mark the picture as acceptable.
                isOutside = true
                break
            }
            if (a == tag_crowd || a == tag_group) {
                println("Caption: failed")
            }
        }
    }

Moving on to the most important part of this use case: checking how many people are actually in the picture.

Not only do we count every person detected, we also check if they appear old enough to be required to wear a mask at all.

    // Faces detected in one picture: after the split, every piece from index 1 on starts with an age.
    val facesList = json.get("faces").toString().split("\"age\":")
    var counter = 0 // people too young to count towards the limit
    var i = 1
    while (i < facesList.size) {
        // Read only the leading digits, so one-digit ages parse as well.
        if (facesList[i].takeWhile { it.isDigit() }.toInt() < age_to_need_tests) {
            counter++
        }
        i++
    }

    if ((facesList.size - 1) > (corona_limit + counter) || !isOutside) {
        println("Faces: corona measures failed")
    } else {
        println("Persons are under corona_limit: " + (facesList.size - 1) + " <= " + (corona_limit + counter))
    }

If there are more people than the custom corona limit allows, the app shows a pop-up to alert the user to the risk.
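The counting rule can also be written as a small pure function, which makes the threshold easier to test. This is a sketch under assumptions: `extractAges` and `exceedsLimit` are hypothetical names, the ages are extracted with a regular expression instead of fixed character offsets, the `isOutside` flag is left out, and the sample string is hand-written, not real Azure output.

```kotlin
// Pulls every integer "age" value out of a serialized faces array.
fun extractAges(facesJson: String): List<Int> =
    Regex("\"age\"\\s*:\\s*(\\d+)")
        .findAll(facesJson)
        .map { it.groupValues[1].toInt() }
        .toList()

// People younger than minAge raise the allowed head count by one each.
fun exceedsLimit(ages: List<Int>, limit: Int, minAge: Int): Boolean {
    val exempt = ages.count { it < minAge }
    return ages.size > limit + exempt
}

fun main() {
    val sample = """[{"age":34,"gender":"Male"},{"age":5,"gender":"Female"},{"age":41,"gender":"Female"}]"""
    val ages = extractAges(sample)
    println(ages)
    println(exceedsLimit(ages, limit = 1, minAge = 6))
}
```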

2.2.2. Forest Fire

To detect forest fires, we use an approach based on color: we check the dominant background color of the image.

    var isSomething: Boolean = false
    // The piece after the "dominantColorBackground" key starts with :"<color>".
    val colors: List<String> = json.get("color").toString().split("\"dominantColorBackground\"")
    // Skip the leading :" and compare the color name that follows.
    if (colors[1].startsWith(tag_red, 2) || colors[1].startsWith(tag_grey, 2) ||
        colors[1].startsWith(tag_yellow, 2) || colors[1].startsWith(tag_orange, 2)) {
        isSomething = true
    }

The rest works like the Corona Limit use case: we evaluate the description of the image that Azure delivers.
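In outline, the remaining steps derive a smoke flag and a fire flag from the description and combine them with the color hint. A minimal sketch of that final combination as a pure function is given here; `FireStatus` and `classify` are hypothetical names, and the mapping only restates the conditions used at the end of the listing that follows.

```kotlin
enum class FireStatus { SMOKE_ONLY, FIRE, NOTHING }

// colorHint corresponds to isSomething: the dominant background color looked fire-like.
fun classify(isSmoke: Boolean, isFire: Boolean, colorHint: Boolean): FireStatus {
    // A fire-like color with no other evidence is treated as smoke.
    val smoke = isSmoke || (!isFire && colorHint)
    return when {
        isFire -> FireStatus.FIRE
        smoke -> FireStatus.SMOKE_ONLY
        else -> FireStatus.NOTHING
    }
}

fun main() {
    println(classify(isSmoke = false, isFire = false, colorHint = true))
    println(classify(isSmoke = true, isFire = true, colorHint = false))
}
```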

    val tagsArray: List<String> = json.get("description").toString().split("\"")
    for (a in tagsArray) {
        if (a == tag_outdoor || a == tag_forest || a == tag_grass || a == tag_tree) {
            isOutside = true
        }
        if (a == tag_smoke) {
            isSmoke = true
        }
        if (a == tag_fire) {
            isFire = true
        }
    }

    // Token 7 is expected to hold the caption text; check its words as well.
    val descriptionArray = tagsArray[7]
    val listOfCaptions = descriptionArray.split(" ")
    for (b in listOfCaptions) {
        if (b == tag_fire) {
            isFire = true
            break
        }
        if (b == tag_smoke) {
            isSmoke = true
            break
        }
    }

    // A fire-like background color without other evidence is treated as smoke.
    if (!isSmoke && !isFire && isSomething) isSmoke = true

    if (isSmoke && !isFire) println("Fire is burning down")
    else if (isFire) println("Should call fire department->fire is burning and smoking")
    else println("Cannot recognise anything")

2.3. Implementation in the app

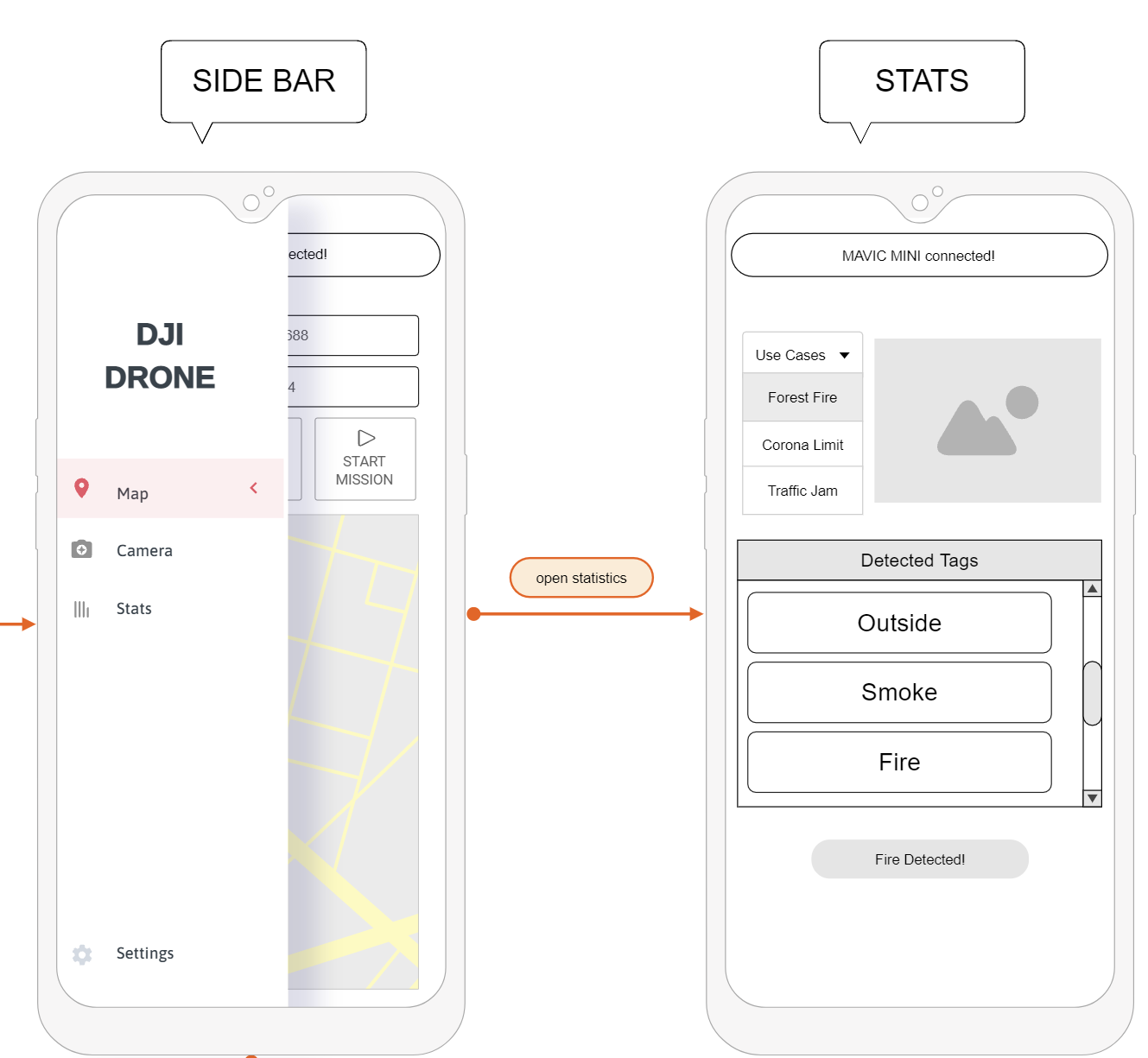

The stat tab is available on the navigation slider. When a use case is selected from the drop-down menu, the drone starts taking pictures and scanning them. If the detected tags match the use case, a pop-up with a fitting message is displayed.

The images are shown on the right-hand side of the use case drawer as soon as they come in.
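The selection flow described above can be sketched as a lookup from the selected use case to the tags that trigger its pop-up. Everything in this sketch is hypothetical: the `UseCase` enum, the `popupMessage` function, the trigger tags, and the message format are illustrations, not the app's actual code.

```kotlin
// Hypothetical model of the drop-down entries; the names follow the use case table.
enum class UseCase { CORONA_LIMIT, FOREST_FIRE, TRAFFIC_JAM, ANIMALS_IN_GRASS }

// Returns the pop-up text when a detected tag matches the selected use case, or null otherwise.
fun popupMessage(
    selected: UseCase,
    detectedTags: Set<String>,
    triggers: Map<UseCase, Set<String>>
): String? {
    val hits = triggers[selected].orEmpty().intersect(detectedTags)
    return if (hits.isEmpty()) null else "${selected.name}: detected ${hits.sorted().joinToString()}"
}

fun main() {
    // Assumed trigger tags, for illustration only.
    val triggers = mapOf(UseCase.FOREST_FIRE to setOf("fire", "smoke"))
    println(popupMessage(UseCase.FOREST_FIRE, setOf("tree", "smoke"), triggers))
    println(popupMessage(UseCase.TRAFFIC_JAM, setOf("tree", "smoke"), triggers))
}
```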